Although we have said quite a lot about the origin of the 1st person perspective, memory, the mind’s eye, free will, and the nature of life itself, we have not said anything about why certain experiences feel pleasant (good) and others unpleasant (bad). Why should anything feel pleasant? Why is there pain? Why are there qualia to experience? Sure, there are studies showing that certain chemicals like serotonin and dopamine are correlated with positive moods and good feelings. They may even be an essential part of some causal chain. But we are still left asking the question: why does the chemical serotonin feel good? Why should any chemical have any bearing on our feelings? If the 1st/3rd person duality we have described here is to hold, then every phenomenon in subjective experience must have a dual description in objective physics. All those pleasant and unpleasant feelings, all of those wants, desires, pains, and needs must have a dual description not just in disassociated chemical reactions, but directly pertaining to a single quantum entangled system – the self. On the other side, quantum mechanics cares about only one thing: energy states. Generally, systems left alone “want” to transition to lower and/or more stable energy states, e.g. an electron in an excited state of the hydrogen atom will emit a photon to return to the lower, more stable ground state. So, all the subjectivity in life must be understood, in its dual quantum mechanical description, as relating to the energy state of the organism’s quantum entangled system.
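To put numbers on the hydrogen example, here is a minimal sketch using the textbook Bohr-model formula for the energy levels, E_n = -13.6 eV / n². The function names are made up for this illustration, and the model ignores fine structure and the finite mass of the proton. It only shows the size of the energy drop when an excited electron returns to the ground state; it says nothing about organisms.

```python
# Illustrative sketch only: Bohr-model energy levels of hydrogen.
RYDBERG_ENERGY_EV = 13.605693   # binding energy of the hydrogen ground state, in eV
HC_EV_NM = 1239.841984          # Planck constant times speed of light, in eV*nm


def level_energy_ev(n):
    """Energy (eV) of the hydrogen level with principal quantum number n."""
    return -RYDBERG_ENERGY_EV / n**2


def emitted_photon(n_upper, n_lower):
    """Return (energy in eV, wavelength in nm) of the photon emitted
    when the electron drops from level n_upper to level n_lower."""
    energy = level_energy_ev(n_upper) - level_energy_ev(n_lower)
    return energy, HC_EV_NM / energy


# First excited state (n = 2) back to the ground state (n = 1).
energy_ev, wavelength_nm = emitted_photon(2, 1)
print(f"2 -> 1: {energy_ev:.2f} eV, {wavelength_nm:.1f} nm")
```

The photon carries away roughly 10.2 eV, which puts it in the ultraviolet; the atom is left in its lowest, most stable state.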

To move forward we need to refer to the paper “Quantum entanglement between the electron clouds of nucleic acids in DNA” by E. Rieper et al. (2010), which shows that the electron clouds of neighboring nucleotides are entangled in DNA. Moreover, the entanglement helps to hold DNA together and allows it to achieve a more stable energy configuration. To get an intuitive idea of how this works, imagine electrons swirling laterally, some clockwise, some counter-clockwise, around a long double-helix strand of DNA, tugging on it, inducing instability in the chain. When entangled, the electron clouds slip into a superposition of states so that each electron is half on the right side and half on the left, and they balance each other out, sort of like orbiting in symmetric unison, stabilizing the molecule. Similarly, for oscillations along the length of the chain, if neighboring oscillations are out of sync, disharmonious, this induces instability in the biomolecule. If the oscillations are synchronized, entangled together, harmonious, like normal modes of oscillation in a classical spring, the energy state is lower, stabilizing the molecule.

This is not a new trick of Nature: the nucleus of deuterium (an isotope of hydrogen) is composed of a proton and a neutron that sit in an entangled superposition of states (an isospin singlet and triplet state, hat tip J. McFadden and J. Al-Khalili) so that they may bind closer to each other. This allows the system to form a more stable nuclear state. Suppose that life takes it one step further, though, and entanglement moves far beyond stabilizing individual atoms and biomolecules and instead entangles biomolecules all over the organism together. Intuitively, to imagine the meaning of all this entanglement, think of a large selection of biomolecules in your body vibrating coherently, synchronized – like a marching band marching together as One unit rather than a cluster of chaotic individuals – stabilizing the organism as entanglement in the electron clouds did for DNA. To provide a guess at how this entanglement could be sustained, we invoke the idea presented earlier that DNA may function not only as a source of genetic code but as a sort of antenna – an EPR photon source for entangling other biomolecules, constantly being driven, absorbing, down-converting and re-emitting entangled photons (possibly THz) on femtosecond timescales (faster than decoherence rates) to keep the system entangled together as One unit. This entanglement then may have a stabilizing effect on the organism’s quantum entangled system, lowering and/or stabilizing its collective energy state. If this is true, then several natural, interesting and compelling explanations of dual subjective/objective phenomena emerge.

Stress

If we fire any particle, whether it be light (photons), electrons, or something relatively heavy like buckyballs (the molecule buckminsterfullerene, C60) through a two-slit interferometer, we will see an interference pattern on the other side. All particles exhibit this wave-like quantum property regardless of size – it’s just that more massive ones have a much shorter wavelength and therefore a narrower interference pattern. When this experiment is performed, it is important, however, to remove all gas molecules from the interferometer chamber. Gas molecules interfere with the wave-like nature of the particles and will ruin the interference pattern. One way to think of it is: if anything in the environment acquires information about where the particle is, like which of the slits the particle passes through, the wave-like nature of the particle is disrupted and so is the interference pattern. If the information is only partial (i.e. not certain), the interference pattern is still visible but washed out. Once information is obtained definitively showing which slit the particle went through, though, the interference pattern is completely destroyed (this is decoherence).
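The two quantitative points in this paragraph can be sketched in a few lines. The first function uses the de Broglie relation, wavelength = h / (mass × speed). The second uses the standard wave-particle duality bound V² + D² ≤ 1, where V is the fringe visibility and D is how well the environment can tell the two paths apart; the code takes the idealized best case V = sqrt(1 − D²). The beam speed of 200 m/s, the rounded C60 mass of 720 atomic mass units, and all function names are assumptions made for this illustration.

```python
import math

PLANCK_H = 6.62607015e-34          # Planck constant, J*s
ATOMIC_MASS_UNIT = 1.66053907e-27  # kg
ELECTRON_MASS = 9.1093837e-31      # kg


def de_broglie_wavelength(mass_kg, speed_m_s):
    """Matter wavelength in meters: heavier or faster particles have shorter waves."""
    return PLANCK_H / (mass_kg * speed_m_s)


def fringe_visibility(distinguishability):
    """Best-case fringe visibility V when the environment holds which-path
    information of strength D (0 = none, 1 = certain), from V^2 + D^2 <= 1."""
    return math.sqrt(1.0 - distinguishability**2)


def intensity(x, fringe_spacing, distinguishability):
    """Idealized two-slit pattern: a cosine fringe whose contrast shrinks
    as which-path information leaks out."""
    v = fringe_visibility(distinguishability)
    return 1.0 + v * math.cos(2.0 * math.pi * x / fringe_spacing)


# Assumed beam speed of 200 m/s for both particles, for comparison only.
c60_wavelength = de_broglie_wavelength(720 * ATOMIC_MASS_UNIT, 200.0)
electron_wavelength = de_broglie_wavelength(ELECTRON_MASS, 200.0)
print(f"C60: {c60_wavelength:.2e} m, electron: {electron_wavelength:.2e} m")

for d in (0.0, 0.6, 1.0):
    # Difference between a bright fringe (x = 0) and a dark fringe (x = half a spacing).
    contrast = intensity(0.0, 1.0, d) - intensity(0.5, 1.0, d)
    print(f"D = {d}: bright-minus-dark contrast = {contrast:.2f}")
```

At the same speed the buckyball's wavelength comes out millions of times shorter than the electron's, and the fringe contrast falls smoothly to zero as the which-path information becomes certain.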

Figure 21: A hologram of a mouse. Two photographs of a single hologram taken from different viewpoints. Holo-Mouse.jpg by Georg-Johann Lay; derivative work by Epzcaw. Public domain, from Wikipedia.

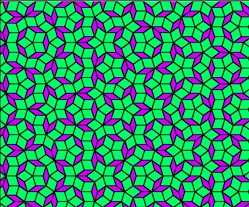

In the body of an organism, the establishment of entanglement throughout a macro-collection of biomolecules may be like projecting an internal hologram. Holograms are made from interfering coherent light (i.e. light of the same frequency, like a laser). In this case, the frequency is not visible light but probably frequencies like THz that interact with the vibrational states of biomolecules. The hologram is analogous to the interference pattern in the two-slit interferometer described above and is the means by which biomolecules throughout the body are entangled together. It allows biomolecules to be synchronized and brought to operate in unison – as One entity – so that it may, for instance, direct the growth of biomolecules such as microtubules (see “Live visualizations of single isolated tubulin protein self-assembly via tunneling current: effect of electromagnetic pumping during spontaneous growth of microtubule” (2014) by S. Sahu et al.), or control gene expression, as in “Specificity and heterogeneity of THz radiation effect on gene expression in mouse mesenchymal stem cells” by B. S. Alexandrov et al. (2013). It is also the means by which a lower and/or more stable energy state is achieved for the collective entangled biomolecules in the organism. When something disrupts this interference pattern, just as gas molecules disrupt the distinct interference bands in the interferometer, this is experienced by the organism as stress. This stress could be mental stress, environmental stress, or physical stress – like being sick. The root cause of the feeling, though, is that something is disrupting the stable quantum energy state of the organism. The feeling of stress in the 1st person is dual to this quantum description in the 3rd.

Heart rate variability refers to variations in the heart’s rhythmic beat and is a strong indicator of overall health, including stress levels. See this video “Heart Rate Variability Explained” by J. Augustine (2007) for an introduction. The human heart is a rhythmic organ, intimately connected to the autonomic nervous system, and perhaps this is why it is particularly sensitive to stress – and thereby to disruptions of the coherent hologram entangling the organism together. If you experience stress, you probably feel it most strongly in your heart. In some cases, it may feel like your heart is in the grip of a vice, because the interference pattern synchronizing the whole body, and so important to the heart’s rhythmic operation, is being interfered with. For more on clinical studies relating heart rate variability to stress, see the paper “The Effects of Psychosocial Stress on Heart Rate Variability in Panic Disorder” by K. Petrowski et al. (2010).

There are studies connecting stress to negative clinical outcomes as it relates to all kinds of health issues including digestive, fertility, and urinary problems as well as a weakened immune system. However, evidence linking stress and cancer is still weak, possibly because its development is long term and there are other explanatory covarying factors such as smoking and alcohol consumption that are themselves behavioral responses to stress (see this page by the National Cancer Institute for more).

On the other hand, adaptation to stress is a tremendously positive experience. It feels great to overcome stress. We have explored previously how evolutionary adaptation to stress (e.g. heat stress, starvation stress, oxygen stress) is a quantum transition, or series of transitions, to more stable quantum energy states (see “What if Quantum Mechanics is the Miracle Behind Evolution?” for more). This is made possible because of the vast amount of entanglement taking place in the organism. Psychophysical stress is no different. Adapting to stress is a quantum transition to a more stable energy state – a transition that clears up the interference pattern, clears up the hologram, bringing stabilizing coherent entanglement to the organism.

Meditation

In the same way that stress disrupts entanglement throughout the body, causing instability in quantum energy states, we can explore the stabilizing effects of quantum entanglement in the mind through meditation. Meditation is about calming the mind. Practitioners unanimously speak of its benefits and the inner peace it bestows, even though proficiency requires diligent practice (also see here on the health benefits of meditation). In the words of yogi Dada Gunamuktananda, in his video “Consciousness — the Final Frontier” (2014), while Descartes famously said, “I think therefore I am”, Gunamuktananda’s response is “When I stop thinking then I really am!”.

The quantum Zeno effect can be described as the action of keeping a quantum state localized by continually observing it. Meditation is the opposite. When we stop thinking we stop using our mind’s eye to direct our thoughts, we stop making choices, we stop subjecting ourselves to measurement. The absence of measurement allows quantum entanglement to grow; it allows quantum superposition to grow. The system must be driven and out-of-equilibrium to do this, yes, but that is the nature of biological systems. In our illustrative example, the mind’s eye is not making a choice at end “B” or at end “A”, but is letting entanglement flow; the wave function is not collapsed, and superpositions of states expand. It allows the “brain solitons” to delocalize. This entanglement has a stabilizing effect on the organism’s energy state in the 3rd person and feels quite pleasant to you in the 1st. Awareness of the self increases substantially during meditation – a direct result of delocalization.

Another aspect of meditation is its focus on directing a sort of “energy” to certain parts of the body, e.g. an arm, a leg, or the forebrain. The term “energy” is used, but this is not the same as the rigorously defined quantity of energy in physics. However, it does not seem crazy to consider these two terms related. Interestingly, we have seen that the Davydov soliton is thought to transport real energy up and down alpha-helical structures, and these structures are ubiquitous throughout the body. The Davydov soliton quite possibly could play a critical role in the manifestation of the mind’s eye. And, in meditation, it is the mind’s eye that focuses energy on a specific region. Coincidence? If there were a quantum way to transport energy from one alpha-helical molecule to another, and thereby from one region of the organism to another, this would make the association of the two descriptions of energy sensible. A quantum mechanism for such transport is not yet known; however, this may be possible through the holographic process of photon exchange we describe above.

If you feel like undertaking an experiment, try this one: the next time you find yourself going through security at an airport and you notice a millimeter wave scanning device (these operate at tens of GHz, just below the THz range), pay attention to your feelings during the scan. Prior to passing through the machine, try to meditate mildly, calm your mind and increase awareness of your mind and body. See if you can feel the momentary effect, a slight muddling of the hologram, of the scan on your quantum entangled self!

A regular meditation practice can lead to a rich set of inner experiences that explore the 1st/3rd person duality. Generally, you can find a plethora of experiences that are immensely pleasant (e.g. the resonance associated with Om in the mind feels incredible, delocalizing, loving, and like becoming One with your surroundings). In each case, the feeling of the experience is dual to a quantum mechanical transition – more stable states feel calm, pleasant, patient, love-like, unifying, while unstable states feel frantic, impatient, scattered, unpleasant.

Sex

Everyone agrees sex feels good. But why? We know that chemicals are released in the body during sex such as dopamine, prolactin, oxytocin, serotonin, and norepinephrine. But why should these arbitrary chemicals feel great? Are they magic? We know the parasympathetic nervous system is involved, and we know studies of the brain show surprisingly little activity, but it feels like we are saying everything that is objectively correlated with sex, yet learning nothing about why it is a fantastic subjective experience! We’ve talked already about quantum mechanics caring about energy levels, and only that, so suppose sex is about energy states. Specifically, the creation of a low-level energy state called a Fröhlich condensate. A Fröhlich condensate is a special quantum mechanical configuration of matter that allows a number of particles to entangle and become One thing, similar to the Davydov soliton. It is a precise solution to a form of the NLSE first proposed back in the 1960s by physicist Herbert Fröhlich. When it forms, an exceptionally low energy quantum state is created and energy is dumped into it. Fröhlich condensates require a driving force to put energy into them – guess what that is? To get an intuitive feel for what a Fröhlich condensate is, imagine people doing “the wave” at a sporting event. This is the phenomenon where a wave of human-hand-raising circles the stadium. When it passes by your seating section you stand up and throw your arms in the air. From afar, it looks like a smooth wave traveling around the stadium. Each person is an individual oscillator – you can stand up or sit down independently, but when you participate in the wave you synchronize your “oscillations” with the rest of the fans in the stadium and create a different kind of oscillation – a “wave of fans”. You have to expend energy to get it to form, and these waves are only temporary, but they are a lot of fun and provide a feeling of camaraderie among people.

The hormones and neurotransmitters mentioned above are correlated with, and may even facilitate, the quantum entanglement that accompanies the Fröhlich condensate, but they are not the source of the pleasure. Only energy state transitions connected to your quantum entangled self can do that. You interact with this newly formed quasiparticle and, while it lasts, feel the pleasure of transitioning into a lower energy state. But why does Nature do this? Is it just to create good feelings to induce procreation? Possibly. But, maybe, just maybe, the point is to kickstart the gametes with a big dose of quantum entanglement, give them some computing power to find their way on the journey ahead, a warm feeling of Oneness, a spark, to help them on their way, to pass on the gift of life…

Understanding

Why does it always feel good when we finally understand something? That’s not to say gaining knowledge always feels good; certainly there may be some things in life that we wish we could “un-know”. Understanding is different from knowledge. Understanding is about “getting it”. We’ve all had moments battling to understand an idea, wrestling with it, sometimes seemingly hopelessly, until, suddenly, we “get it”, it all comes together, and we feel great! What is going on in the 3rd person description that is dual to this consistently pleasant experience?

Figure 22: Another nonlinear Schrödinger equation (NLSE) solution – the Helmholtz Hamiltonian system. Definitely watch the video and get more information from Quantum Calculus.

Quantum neural networks are models designed to describe the behavior of neurons in the brain, while also implementing quantum effects and leveraging quantum computing power. While no method has been found yet that fully integrates quantum with the classical aspects of neural computing, designs for quantum network models usually involve minimizing a so-called “energy” function that both (a) reduces the errors that the model makes and (b) minimizes the square of the “synaptic” weights connecting neurons (see the paper “The Quest for a Quantum Neural Network” by M. Schuld et al. (2014) for more). The purpose of the first is clear – we want the model to learn how to make predictions without mistakes – while the second tends to diversify the model’s dependence across a plethora of “experts”. For example, if you are a juror in a court case, isn’t your confidence in your verdict greater if you base your decision on a diverse array of expert testimony, e.g. DNA evidence, ballistics, eye-witnesses, fingerprints, smoking gun, etc., and they all agree? In the case of a neural network each “expert” is a neuron, but the idea is the same. This is a standard procedure in neural network learning (both classical and quantum) and has achieved substantial success in the machine learning community. Still, quantum mechanics only cares about real energy states, so what does this “energy” function have to do with real energy? Conveniently, the function that is minimized in the quantum neural network (see Hopfield network) is equivalent to the real interaction energy in something called an Ising spin-glass model from physics. In other words, if the neurons and their connections in your brain can be regarded as an Ising model, then the quantum neural network learns by lowering the real energy embedded in its structure.

Experimental evidence that a biological neural network behaves like an Ising model was found in “Weak Pairwise Correlations Imply Strongly Correlated Network States in a Neural Population” by E. Schneidman et al. (2006), a study of salamander ganglion cells. This suggests that when you learn something, and that moment of understanding hits you, and a rush of joy comes over you, this is a quantum energy transition to a lower energy state. Of course, for the 1st/3rd person duality presented here to hold, your quantum-entangled-self would need to be entangled with the quantum network, functioning as One system – a fully quantum neural network design would need to be found.
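The link between a Hopfield network and an Ising model can be made concrete with a small classical sketch. The network’s “energy” is E = −½ Σ w_ij s_i s_j, the same form as the interaction energy of Ising spins s_i = ±1 with couplings w_ij. The example below stores one pattern with a Hebbian rule, corrupts it, and lets the network relax one neuron at a time; the energy never goes up. This is a purely classical toy with hypothetical function names, not the quantum design discussed above.

```python
def hebbian_weights(pattern):
    """Couplings that store one +1/-1 pattern (no self-coupling)."""
    n = len(pattern)
    return [[0 if i == j else pattern[i] * pattern[j] for j in range(n)]
            for i in range(n)]


def energy(weights, state):
    """Ising-style energy E = -1/2 * sum_ij w_ij * s_i * s_j."""
    n = len(state)
    return -0.5 * sum(weights[i][j] * state[i] * state[j]
                      for i in range(n) for j in range(n))


def relax(weights, state):
    """One sweep of one-at-a-time updates; records the energy after each step."""
    state = list(state)
    energies = [energy(weights, state)]
    for i in range(len(state)):
        # Local field felt by neuron i from all the other neurons.
        field = sum(weights[i][j] * state[j] for j in range(len(state)))
        state[i] = 1 if field >= 0 else -1
        energies.append(energy(weights, state))
    return state, energies


stored = [1, -1, 1, -1, 1, 1, -1, -1]
noisy = list(stored)
noisy[1] = -noisy[1]   # corrupt two entries of the stored pattern
noisy[5] = -noisy[5]

recalled, energies = relax(hebbian_weights(stored), noisy)
print(recalled == stored, energies[0], energies[-1])
```

The corrupted state starts at a higher energy and slides downhill to the stored pattern, which sits at the minimum. In this toy, “recalling” the pattern and “lowering the energy” are the same process.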

Figure 23: Quantum Neural Network from Kinda Altarboush at slideshare.net

The learning function we described above is actually completely general – it appears in many machine learning algorithms that “maximize the margin” in learning the task of classification or regression. Margin maximization has an Occam’s Razor-like effect – it directly reduces the capacity of the model to “fit” the data, simplifying it, which in turn makes the model produce better predictions. When a model makes better predictions, it is natural to suggest the model “understands” the data better. It actually makes you wonder: are the rules of quantum mechanics set up to encourage understanding in all kinds of systems throughout the Universe? In other words, in whatever physical systems something like an Ising model shows up, should we expect something that resembles understanding? If so, understanding is not something sought out by just humans, or even just high-level organisms, but is intrinsic to the Universe itself! Suddenly, the quote below by physicist Brian Cox does not sound far-fetched at all:

“We are the cosmos made conscious and life is the means by which the Universe understands itself.” – Brian Cox, Physicist (~2011) Television show: “Wonders of the Universe – Messengers”

Self-Awareness and Gödel’s Incompleteness Theorem

There is a strange feeling to our self-awareness that is hard to put a finger on. Douglas Hofstadter, in his Pulitzer Prize-winning book “Gödel, Escher, Bach” (1979), explored the idea that the source of consciousness is a kind of “strange loop” that embodies abstract self-referencing ability. The self-referencing fugues of J. S. Bach, the abstract, impossible, self-referential drawings of M. C. Escher, and the self-referential formal math bomb known as Gödel’s incompleteness theorem are examples that triangulate Hofstadter’s concept.

Figure 24: Drawing Hands by M. C. Escher, 1948, Lithograph

Today, self-awareness is widely accepted as a critical step to consciousness and has inspired artificial intelligence researchers to attempt to build self-aware robots. This video by Hod Lipson “Building Self Aware Robots” (2007) shows some of them. The robots start out not knowing what they themselves look like. As they try to execute motion tasks – like moving across the room – they become “aware” of whether they have arms and legs, how many, and, generally, what they look like – and improve their skills at locomotion. There are two parts to the robot’s autonomous command center: (a) the model of itself, which is learned on the fly from data collected in the act of moving, and (b) the locomotion command center, which uses (a) to attempt to move across the floor. Each of these takes a turn in operating serially. So, the model (a) of itself updates using the latest collected data, then the locomotion module (b) uses that model to move, which in turn generates new data, which is fed back to update the model of itself (a), and so on. It is an iterative process of (a)->(b)->(a)->(b)->(a)… In the video, you can see the robot’s self-referential model evolve in real time to where it achieves an accurate 3-D representation of itself. While the learning these robots do is remarkable, they do not seem to be self-aware and conscious like we are. The module (b) has no understanding of module (a) and vice versa. No real awareness of it. The processing that takes place is still the tunnel-vision algorithmic processing of simple logical gates in a CPU. Still, it does seem like Lipson is on the right track – the self-awareness developed by these robots is clearly necessary for human consciousness, but it does not feel sufficient.
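The serial (a)->(b)->(a) loop described above can be sketched as a toy in a few lines. This is not Lipson’s algorithm: the one-number “self-model” (an estimate of the robot’s own stride length), the noise-free sensing, and every name and default value are assumptions made purely for illustration.

```python
def self_modeling_loop(true_stride=0.37, target=10.0, max_rounds=10):
    """Toy robot that does not know its own stride length.

    (b) plans a number of steps using its current self-model and moves;
    (a) then updates the self-model from the distance it actually covered.
    """
    stride_estimate = 1.0   # (a) initial self-model: a deliberately wrong guess
    total_steps = 0
    total_moved = 0.0
    position = 0.0
    for _ in range(max_rounds):
        remaining = target - position
        if remaining < stride_estimate / 2:
            break  # closer to the target than half a stride: stop
        # (b) locomotion module: plan using the current self-model, then act.
        steps = max(1, round(remaining / stride_estimate))
        moved = steps * true_stride      # what the body really does
        position += moved
        # (a) self-model update from the data just collected.
        total_steps += steps
        total_moved += moved
        stride_estimate = total_moved / total_steps
    return stride_estimate, position


estimate, final_position = self_modeling_loop()
print(f"learned stride: {estimate:.2f}, final position: {final_position:.2f}")
```

The two modules only ever pass numbers to each other in turn, which is exactly the limitation noted above: module (b) uses the output of module (a) without any grasp of what it represents.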

Figure 25: (Left) A “figure-eight” Mobius strip from here. (Right) A two-dimensional representation of the Klein bottle immersed in three-dimensional space. Image and caption via Wikipedia. Self-referencing quantum entanglement in the brain gives rise to the feeling of self-awareness.

R. Penrose in his book “The Emperor’s New Mind” (1989) argues that the difference between the human mind and the machine is that the mind can see mathematical truth in non-algorithmic problems. He said there are certain Gödelian statements whose truth humans, because of their consciousness, can see, but which Turing machines can never prove. D. Srivastava et al. (2015) summed Gödel up well: “Gödel’s incompleteness theorem shows that any finite set of rules that encompass the rules of arithmetic is either inconsistent or incomplete. It entails either statements that can be proved to be both true and false, or statements that cannot be proved to be either true or false” from here. An example is the following: suppose F is any formal axiomatic system for proving mathematical statements; then there is a statement, called the Gödelian statement of F, which we will label G(F), equal to the following:

G(F) = “This sentence cannot be proved in F ”

The argument is that the system F can never prove the truth of this statement, but its truth is apparent to us. To see this, assume F could prove the statement; then F proves a sentence that asserts its own unprovability, and we are led to a logical contradiction. On the other hand, if F cannot prove the sentence, then the sentence is true, and F contains a true statement that it cannot prove: F is incomplete. Much has been made of Penrose’s argument, with several notable counter arguments (a review is here) and no definitive resolution. Whatever the formal case may be with Penrose’s argument, true or not, it does seem to capture some essential elements of human consciousness – something “rings true” about it. Something feels strange about these kinds of self-referential loops, and so a natural question is: what kinds of quantum mechanical phenomena are dual to this subjective, albeit vague, 1st person description? We will suggest there are three essential aspects of quantum mechanics at play here:

I.) Quantum systems have the capacity for self-reference through quantum entanglement. The Gödelian statement above is obviously self-referential, but the serial self-referencing ability in Lipson’s robots does not seem to capture it. The crisscross entanglement we have described here, the self-referencing capacity of the NLSE, does. The difference is the wave function in this case loops back onto itself, but, because of entanglement, it is always One thing. Just as the Gödelian statement above must be evaluated as one mathematical statement. Lipson’s robots would need to entangle module (a) and module (b) together so they function as One thing.

II.) Quantum systems can be in a superposition of states, simultaneously spin up and spin down. Abstracted so spins represent a Boolean qubit, it is perfectly fine for a statement to be both true and false at the same time. Consider this version of the above Gödelian statement:

G(F) = “This statement is false”

If we try to iteratively evaluate this statement (like Lipson’s robots), we might start assuming the statement is true. Ok, so it is true that this statement is false, then we conclude the statement is false. Ok, if false, then it is false that this statement is false, then we conclude the statement is true. And, we are back to where we started. We can iterate through like this forever and never understand this statement. It will never converge to one answer. Only with a quantum entangled system can we model the essence of this statement, that it is true and false at the same time, in a superposition of states, never converging to one or the other. Since you are a quantum entangled system, that’s ok. You can model this statement. Consider this version:

G(F) = “I cannot prove this sentence”

This might be your Gödelian statement. You cannot prove it true or false without leading to a contradiction, but you can model it in your mind, you can understand it. Your mind is not a classical system governed by classical logic.
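The step-by-step evaluation of “This statement is false” described in II.) is easy to simulate, and the contrast with a superposition can be shown with a single qubit written as a pair of amplitudes. The sketch below is only an analogy under stated assumptions: “evaluating” the statement is modeled as logical negation, and its quantum counterpart is taken to be the NOT gate (Pauli X), which swaps the amplitudes for “false” and “true”. The function names are made up for this example.

```python
import math


def iterate_liar(initial_guess, rounds):
    """Classical evaluation: each pass flips the truth value just assumed."""
    history = [initial_guess]
    for _ in range(rounds):
        history.append(not history[-1])
    return history


def apply_not(amplitudes):
    """Quantum NOT (Pauli X) on one qubit given as (amplitude_false, amplitude_true)."""
    amp_false, amp_true = amplitudes
    return (amp_true, amp_false)


history = iterate_liar(True, 5)
print(history)  # alternates forever, never settling on one value

# Equal superposition of false and true.
equal_superposition = (1 / math.sqrt(2), 1 / math.sqrt(2))
print(apply_not(equal_superposition) == equal_superposition)
```

The classical loop alternates with period two, while the equal superposition is left unchanged by negation: it is a fixed point of the NOT gate. That is the sense in which a superposition can “hold” the statement steadily, though the sketch does not show that brains do this.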

III.) The last quantum mechanical trait at play here is the ability to evaluate infinite loops instantaneously. In physics the problem of solving the Schrödinger equation is something that Nature does instantaneously even though it involves non-local information. For example, the solutions to the equation that describe a particle in a box look like the standing waves that would fit in that box. But suppose that box is inside another bigger box, and we suddenly remove one of the walls, Nature will instantaneously solve the new Schrödinger equation for the bigger box. These new solutions will look like standing waves in the bigger box. Another way of looking at this is from Feynman’s path integral perspective. The path integral formulation is equivalent to the Schrödinger equation, it’s just a different way to model the evolution of a quantum system. If we want to ask how does the state of some electron change with time (e.g. upon removal of a wall of the box) we can calculate infinitely many path integrals over all possible ways the system could evolve and sum the “amplitudes” up instantaneously and this would describe the time evolution of the system. Fortunately, we have calculus to integrate this infinite sum. In either case, Feynman or Schrödinger, the point is Nature considers infinite non-local information in quantum mechanics all the time. Now, consider the following issue with our first Gödelian statement. We can simply say the statement G(F) is now an axiom in a new stronger system called F’. Then have we plugged the hole created by Gödel’s statement? The answer is no, because we can always construct a new Gödelian statement for the new system F’:

G(F’) = “This sentence cannot be proved in F’ ”

We could add a new axiom to F’ and create F”, but then we would just create a new Gödelian statement for F”, and so on forever (for a much more thorough treatment of this process of “jumping out of the system”, see Hofstadter’s Gödel, Escher, Bach)… If we operate with blinders on like a Turing machine, not seeing the lack of convergence at infinity, then we could iterate through this process forever. A Turing machine would have no way of knowing this iterative process would lead nowhere. But, in the right quantum system, we can count on Nature to evaluate this infinite loop for us, to solve the Schrödinger equation, like finding the standing waves that fit in a sort of recursive neural circuit. We feel this when we think about this self-referential puzzle: our quantum minds are modeling this statement, we find a quantum solution, and we feel the true nature of the statement. Another way to think of this is just to consider the version of Gödel’s statement in II.) above. We can iteratively evaluate it again and again, True, False, True, False, and it will never converge. This infinite series, also, can be described in some kind of quantum circuit in the mind. Nature does this infinite calculation for us, sums all the paths, all the amplitudes, and it is clear to us that it will never converge to a provable statement. The solution to the Schrödinger equation for the quantum circuit corresponds to a superposition of true and false, just like Nature finding that an entangled superposition is the solution to deuterium (energy minimum in the nucleus). It is formally undecidable classically, but representable in a quantum circuit.

Figure 26: These are just three of the infinitely many paths that contribute to the quantum amplitude for a particle moving from point A at some time t0 to point B at some other time t1. Drawn by Matt McIrvin, CC BY-SA 3.0, from Wikipedia.

Interestingly, I do not feel I can ever retain an understanding of Gödel’s theorem in long-term memory. Every time I recall it, I have to think it through a few times before I feel I understand it again. I wonder if this reflects the inability of a classical system, the neurons and their connections, to adequately describe Gödel’s statement. Perhaps I have to think it through each time to conjure up a quantum model in my mind. Only in my quantum mind, in short-term memory, can I adequately represent its self-referential nature, the simultaneous truth and falsehood of the statement.

“A bit beyond perception’s reach

I sometimes believe I see

That life is two locked boxes, each

Containing the other’s key”

– from “Free Will – a road less traveled in Quantum Information Processing” by Ilyas Khan

General Qualia of the Senses

Red, yellow, blue, hot, cold, pain, tickle, joy, fear, hunger (hat tip here for the suggestions) are all qualia of the senses. Why do these things feel as they do? In other words, why does yellow appear as the color yellow? Why should it appear as a color at all and not just feel as sound does? Both are waves. The frequencies of visible light are in the hundreds of trillions of hertz, far outside the audible range (20 to 20,000 Hz), so there would be no confusion as to the origin of the signal. Why not map both these inputs onto the same perception? Both systems are directional; you could just have an image in your mind of where any and all waves were coming from. Think of how a bat must “see” with its sonar, for instance. In the duality we have described here, all 1st person subjective phenomena must have a dual 3rd person quantum mechanical description as it pertains to a single quantum entangled system – the self. There must be a quantum signature to all these phenomena. The quantum effect of light absorption on the quantum entangled self must be qualitatively different from the quantum effect of sound detection. What is the difference in these quantum signature effects? Could they each induce different kinds of quasiparticles? Maybe light generates a spectrum of magnons, while sound induces a spectrum of phonons? The feeling of color would then be the subjective dual to a magnon rippling through the quantum entangled self, while the feeling of sound would be the dual to phonons vibrating through it.

Interestingly, the human eye has recently been shown to be able to detect single photons, providing an example of how the brain is sensitive to quantum-level effects. Perhaps a useful way to think about the distinction between the macroscopic brain and the quantum mind is in terms of classical versus quantum information. Classical information will tell you what’s in the world, where it is, what color it is, etc., but quantum information will tell you what it feels like.

Moral Responsibility

Philosophers since Aristotle have argued that free will is a necessary requirement for moral responsibility. For if we are not free to make choices, then how can we be held accountable for our actions? Recent studies in the field of Quantum Cognition seek to understand whether human behavior can be modeled using quantum effects. For example, when psychologists study how people play the game known as the Prisoner’s Dilemma, the players’ behavior looks irrational. Players can maximize their “reward” in the game by “defecting” and ratting out their colleague (the other prisoner). But they don’t do this. They tend to “cooperate”, refusing to confess or blame their colleague for the crime and accepting an inferior game-theoretic outcome. It is interesting, though, that if quantum effects are applied, and we assume quantum entanglement between the prisoners, the experimental results are predicted accurately. In the 1st person, this may manifest as our feeling of empathy for others. In the article “You’re not irrational, you’re just quantum probabilistic” (2015), Z. Wang provides an overview of quantum approaches to psychology. The trouble is that it is difficult to describe a physical system that can maintain entanglement between organisms. One guess starts with the fact that the brain does emit brain waves. If these brain waves were composed of photons from an EPR source, and emitted in sufficient quantities, then it is conceivable that absorption of them by another organism could create cross-organism entanglement. A variation on this scheme could be related to eye contact. In this case eye contact would be somehow instrumental in the entanglement – e.g. incoming light is absorbed in one person’s eye by biomolecules (e.g. DNA), down-converted, and re-emitted as lower-frequency entangled EPR photons, and, finally, absorbed by the second person, entangling the organisms together.
The reason for singling out this particular mechanism as a means of entanglement lies in the powerful feelings that arise upon making eye contact, especially prolonged contact, with another individual (see this video for example). Another possibility is that the entanglement is present but exists entirely within the brain of each individual. For instance, imagine two piano players making music together. It feels joyful to each: the sounds resonating together, the surprise of what improvisation the other musician will add next, the harmony. But in this description the entanglement can be seen as taking place between phonons within the auditory centers of each individual brain. Analogously, people empathizing with each other could be making a sort of ‘emotional music’ together, with the quantum entanglement residing entirely within each individual. Whatever the case may turn out to be, the feeling of empathy in the 1st person could be derived from some form of quantum entanglement in the 3rd. The simplistic models of quantum cognition would be an approximation to a much finer biological entanglement, but sufficient to predict the outcomes of these psychology experiments. Interestingly, “question order” is another psychological puzzle that looks irrational from a classical perspective but is explained naturally by quantum mechanics, although it does not relate to entanglement.
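
The classical side of the Prisoner’s Dilemma mentioned above can be sketched in a few lines. The payoff numbers below are hypothetical textbook-style values (higher is better), not data from any of the studies referred to, and the helper names are invented for this example. The sketch only shows why “defecting” looks like the rational move classically; it does not implement any quantum model.

```python
# Hypothetical payoffs. Keys are (my_move, other_move); values are my reward.
PAYOFF = {
    ("cooperate", "cooperate"): 3,
    ("cooperate", "defect"): 0,
    ("defect", "cooperate"): 5,
    ("defect", "defect"): 1,
}
MOVES = ("cooperate", "defect")


def best_response(other_move):
    """Return the move that maximizes my reward against a fixed other move."""
    return max(MOVES, key=lambda my_move: PAYOFF[(my_move, other_move)])


def dominant_strategy():
    """Return a move that is the best response to every other move, if any."""
    responses = {best_response(other) for other in MOVES}
    return responses.pop() if len(responses) == 1 else None


print(dominant_strategy())
print(PAYOFF[("cooperate", "cooperate")], PAYOFF[("defect", "defect")])
```

With these assumed payoffs, defecting is the best response whatever the other player does, yet mutual cooperation leaves both players better off than mutual defection. That gap is what makes the observed cooperative behavior look irrational from a purely classical, self-interested point of view.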

But, empathy alone would not seem to explain moral responsibility. Children start out exploring, doing the wrong thing and making mistakes, not because they are malicious but because they do not know better. It takes time to learn what the right thing to do is. It can be very difficult, even as an adult, to stay on the straight and narrow path of trying to do the right thing. Even the best of us have some missteps along the way. In this article “Aristotle on Moral Responsibility” (1995) author D. Hsieh sums Aristotle’s views up thusly:

“[Aristotle’s] general account of freedom of the will, coupled with [his] view that virtue consists in cultivating good habits over the long-term implies that ‘the virtues are voluntary (for we ourselves are somehow partly responsible for our states of character).’ (Aristotle, 1114b) We are responsible for our states of character because habits and states of character arise from the repetition of certain types action.”

Children decide which choices to make with their mind’s eye. They follow their preferences, which are dual to quantum probabilities. Initially many of these preferences are exploratory – the whole world is new to them! Over time some of these decisions lead to negative outcomes, some lead to positive outcomes, and some lead to short-term positive but long-term negative outcomes (local maxima). Neural connections are rewired in long-term memory as a result of these experiences, which in turn affects the preferences of the child. Quantum computing is probably involved in solving some of these difficult and highly nonlinear connective questions, i.e. in understanding what the “right thing to do” is; the solutions are then relayed to long-term memory through the quantum tickertape of short-term memory. Adult guidance is certainly helpful too. The effects of empathy lead to an understanding of “do unto others as you would have done unto you.” From these experiences, some advice, and sometimes some deep deliberation, morally responsible preferences are developed in the quantum-entangled self. We are still free to make choices, still free to choose to contemplate heinous actions, but our preferences are there, and through them we are ultimately constrained by quantum probabilities.

Vice

Ok, we’ve said a lot about more stable energy states equating with pleasant feelings, but what about vices? Certainly, there are things in this world, temptations, desires, that feel pleasant, at least in the short term, but that we don’t feel are really good for us. What’s going on there? This common phenomenon would seem to be the feeling of transitioning quickly into an easily accessible energy state when another state, perhaps reached by a more arduous transition but substantially more stable, was an alternative. It is trading long-term stability for a short-term pleasure. It is succumbing to temptation and not staying on the more stable straight and narrow path. Quantum mechanically, it is transitioning into a potential energy well that is quicker to reach but unstable, while forgoing a transition into a potential energy well that is much more stable, albeit not as quickly accessible.
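
The two-well picture can be illustrated with a toy calculation. Note the substitution: this sketch uses a classical, Arrhenius-style thermal rate, exp(-barrier / temperature), as a stand-in analogy rather than a quantum mechanical calculation. All barrier heights, well energies and units are hypothetical values chosen only to show the trade-off.

```python
import math


def arrhenius_rate(barrier, temperature=1.0, attempt_rate=1.0):
    """Classical escape rate over a barrier (arbitrary units, an analogy only)."""
    return attempt_rate * math.exp(-barrier / temperature)


# Hypothetical wells: "quick" is easy to reach but shallow,
# "stable" sits behind a higher barrier but is much lower in energy.
wells = {
    "quick": {"barrier": 1.0, "energy": -1.0},
    "stable": {"barrier": 4.0, "energy": -5.0},
}

rates = {name: arrhenius_rate(well["barrier"]) for name, well in wells.items()}
fastest = max(rates, key=rates.get)
lowest = min(wells, key=lambda name: wells[name]["energy"])

print("reached most quickly:", fastest)
print("lowest energy state:", lowest)
```

With these assumed numbers the well that is reached most quickly is not the one with the lowest energy, which is the tension between short-term pleasure and long-term stability described above.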

Love

The greatest of human emotions, it overlaps a bit with meditation. It also has something to do with two organisms entangling together as One. It is kept going by adapting to stress together, transitioning to a more stable energy state as One. The rest we leave to the reader to discover!

Consciousness

All of the above.

Conclusion

In conclusion, if we assume a 1st person perspective is present in fundamental particles, and we assume that some theories regarding maintaining quantum entanglement in biological systems are experimentally proven, a compelling picture of life as a narrative of growing quantum entanglement emerges. As entanglement grows, a sense of Oneness is present, basic short-term memory develops, quantum computing power enables problem solving and creativity, and then a mind’s eye, self-awareness and consciousness itself emerge. A compelling description of many subjective phenomena emerges once we view ourselves as quantum entangled organisms. Descriptions such as “the whole point of your body is to sustain quantum entanglement, that is, to keep you alive” seem apropos! Indeed, quantum entanglement feels as if it is the essence of life itself!

“Western civilization, it seems to me, stands by two great heritages. One is the scientific spirit of adventure — the adventure into the unknown, an unknown which must be recognized as being unknown in order to be explored; the demand that the unanswerable mysteries of the Universe remain unanswered; the attitude that all is uncertain; to summarize it — the humility of the intellect. The other great heritage is Christian ethics — the basis of action on love, the brotherhood of all men, the value of the individual — the humility of the spirit. These two heritages are logically, thoroughly consistent. But logic is not all; one needs one’s heart to follow an idea. If people are going back to religion, what are they going back to? Is the modern church a place to give comfort to a man who doubts God — more, one who disbelieves in God? Is the modern church a place to give comfort and encouragement to the value of such doubts? So far, have we not drawn strength and comfort to maintain the one or the other of these consistent heritages in a way which attacks the values of the other? Is this unavoidable? How can we draw inspiration to support these two pillars of western civilization so that they may stand together in full vigor, mutually unafraid? Is this not the central problem of our time?” – Richard Feynman, physicist

Author’s note: Feynman’s “Christian ethics” seems to refer to those morals that people of all spiritualities hold dear.

The END